ENTERTAINMENT

Le Sserafim, IVE... K-pop girl groups targeted by 'deepfake sex crimes': why can't they be rooted out?

We delve into issues across the entertainment industry, looking at the causes behind each controversy and at what people in the industry are saying.

K-pop girl groups such as Le Sserafim and IVE are falling victim to deepfakes, a by-product of advances in artificial intelligence (AI) technology. AI models trained on photos of the members, used without permission, are spreading online and causing shock.

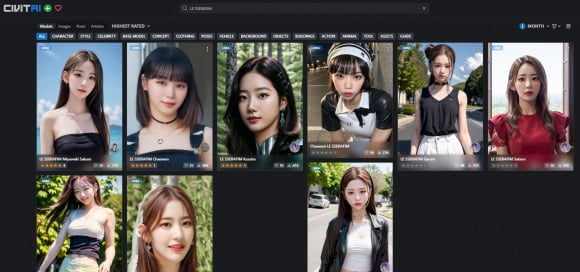

According to industry sources on the 27th, a large number of models that users trained on images of members of popular K-pop girl groups have been uploaded to CivitAI, a site for sharing generative AI models.

The models are built on 'Stable Diffusion', an AI image-generation model, and are used to produce deepfake images. 'Deepfake' is a compound of 'deep learning' and 'fake'. Because the images are generated by AI, they are difficult to tell apart from real ones. Stable Diffusion in particular can be run easily by ordinary users on a home PC.

As cases of abuse increased, such as the production of pornography using images of idols, the National Assembly revised the law known as the 'Deepfake Punishment Act'. Under the revised law, those who produce or distribute deepfake videos face 'imprisonment for up to five years or a fine of up to 50 million won'. Distribution for profit carries an aggravated penalty of 'imprisonment for up to seven years'.

Deepfake pornography has long been a social problem. Penalties for the crime are getting heavier, but doubts persist about whether they actually prevent it. The biggest problem is that in most cases the production platform itself runs on overseas servers, which makes it difficult to detect and punish the crime at its source.

With the crime taking place in this enforcement blind spot, entertainment industry officials are struggling to cope. Agencies are responding to digital sex crimes with civil and criminal complaints, but it is rare for the perpetrators to actually be punished. Mr. A, an industry insider, said, "It is not easy for an agency to respond legally to digital sex crimes. We need legal measures that actually work."

Others said that it is difficult for entertainment agencies to deal with the ongoing digital sex crimes on their own. Mr. B, who works in the entertainment industry, said, "There is a limit to how much a single agency can do in response."

Reporter Yoon Jun-ho, Ten Asia delo410@tenasia.co.kr